We often talk about data quality issues in terms of IT headaches, troubleshooting time, or frustrating reporting errors. But in industrial environments like oil & gas, chemicals, and manufacturing, the stakes are far higher. Here, bad data doesn’t just waste time. It threatens production, safety, and millions of dollars in revenue.

Let’s look at what happens when poor data quality causes a real-world failure.

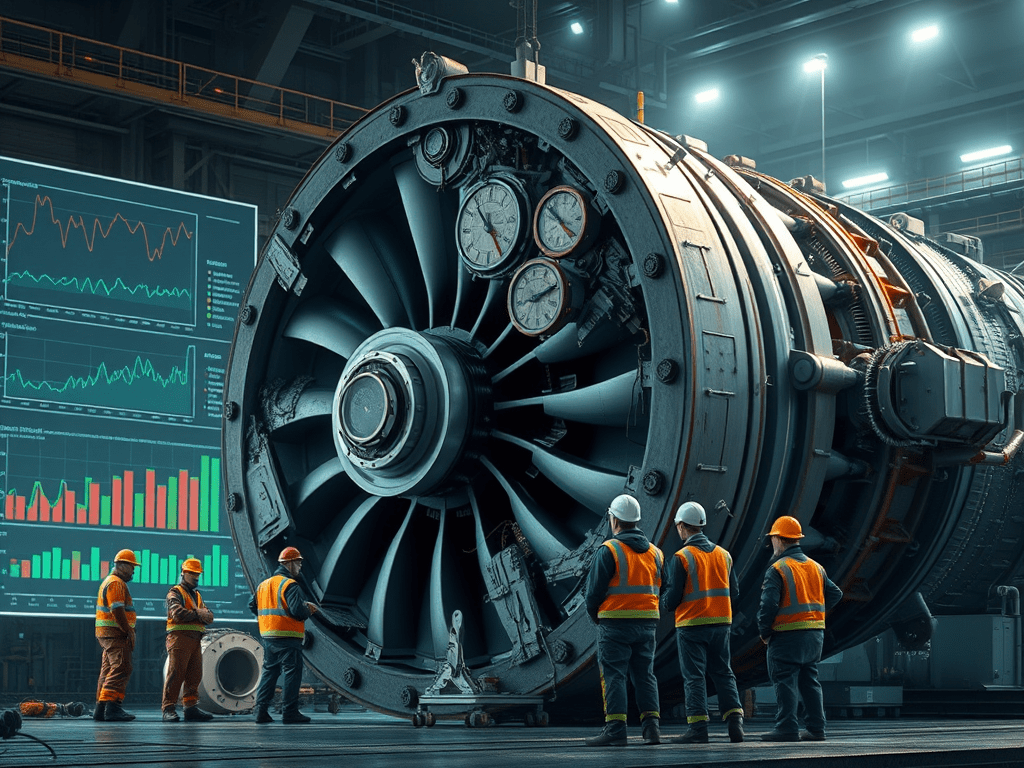

The Cost of a Turbine Failure: A $26M+ Mistake

Imagine that a critical turbine at a mid-sized oil & gas facility goes offline. It's a major piece of equipment that supports one of the plant's key process units. Replacing it, repairing the associated damage, and bringing operations back online takes 3 weeks.

The business impact?

- Lost production: At 10,000 barrels a day and $85/barrel, that’s $17.85 million in lost revenue over 21 days.

- Equipment costs: A replacement turbine, including installation, can easily run $7 million.

- Labor and contractor overtime: Emergency maintenance adds another $1.5–$2 million.

- Regulatory penalties & contract hits: Expect $500,000 to $1 million in fines, penalties, or contractual shortfalls.

Total estimated cost: Over $26 million, and that's just for a 3-week outage.
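The cost breakdown above is simple arithmetic, and writing it down as a small model makes the assumptions explicit and easy to adjust. This is a minimal sketch, assuming the scenario's figures (10,000 barrels/day, $85/barrel, a 21-day outage, and the low and high ends of each cost range); the function names are hypothetical.

```python
# Illustrative outage-cost model using the scenario's estimated figures.
# All dollar amounts are assumptions from the example, not measured data.


def lost_revenue(barrels_per_day: float, price_per_barrel: float, days: int) -> float:
    """Revenue forgone while the process unit is offline."""
    return barrels_per_day * price_per_barrel * days


def outage_cost_range(days: int = 21) -> tuple[float, float]:
    """Return (low, high) total cost estimates for an outage of `days` days."""
    production = lost_revenue(10_000, 85.0, days)  # $17.85M for 21 days
    equipment = 7_000_000                           # replacement turbine + installation
    labor = (1_500_000, 2_000_000)                  # emergency maintenance and overtime
    penalties = (500_000, 1_000_000)                # fines and contractual shortfalls
    low = production + equipment + labor[0] + penalties[0]
    high = production + equipment + labor[1] + penalties[1]
    return low, high


low, high = outage_cost_range()
print(f"Estimated outage cost: ${low / 1e6:.2f}M to ${high / 1e6:.2f}M")
```

Running it prints `Estimated outage cost: $26.85M to $27.85M`, which is where the "over $26 million" figure comes from. Because only lost production scales with time in this model, each additional day of downtime adds $850,000.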

Where Bad Data Comes In

Now, what if this failure was avoidable?

In many cases, it is. And bad data is a silent accomplice.

Common culprits:

- Sensor drift or faulty readings going unnoticed

- Miscalibrated instruments sending incorrect process values

- Tag misassignments causing data to land in the wrong place

- Duplicate data skewing analytics

- Misconfigured AF models or incorrect limits masking early warning signs

- Poor documentation leaving engineers blind to context
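Several of these culprits can be caught with basic automated checks before they reach analytics. The sketch below is illustrative only: the `Reading` type, the function names, and the thresholds are hypothetical assumptions, not the PI System or AF API and not how any particular product implements these checks. It flags duplicate timestamps, a flatlined (possibly stuck) sensor, and drift measured against a redundant reference sensor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reading:
    """One sensor sample (hypothetical structure for illustration)."""
    timestamp: int  # seconds since epoch
    value: float


def find_duplicate_timestamps(readings: list[Reading]) -> list[int]:
    """Return timestamps that appear more than once, in first-seen order."""
    seen: set[int] = set()
    duplicates: list[int] = []
    for r in readings:
        if r.timestamp in seen and r.timestamp not in duplicates:
            duplicates.append(r.timestamp)
        seen.add(r.timestamp)
    return duplicates


def is_flatlined(readings: list[Reading], window: int, tolerance: float = 1e-9) -> bool:
    """True if the last `window` values vary by no more than `tolerance`,
    a common symptom of a stuck or disconnected sensor."""
    if window <= 0 or len(readings) < window:
        return False
    recent = [r.value for r in readings[-window:]]
    return max(recent) - min(recent) <= tolerance


def drift_exceeds(primary: list[Reading], reference: list[Reading], limit: float) -> bool:
    """True if the mean offset between a sensor and a redundant reference
    exceeds `limit`. Assumes both lists are aligned sample-for-sample."""
    if not primary or len(primary) != len(reference):
        raise ValueError("primary and reference must be non-empty and the same length")
    offsets = [p.value - r.value for p, r in zip(primary, reference)]
    mean_offset = sum(offsets) / len(offsets)
    return abs(mean_offset) > limit


# Example: a temperature tag with a duplicated sample and a constant value.
temps = [Reading(0, 180.0), Reading(60, 180.0), Reading(60, 180.0), Reading(120, 180.0)]
print(find_duplicate_timestamps(temps))  # [60]
print(is_flatlined(temps, window=4))     # True

# Example: a primary sensor reading steadily higher than its reference.
primary = [Reading(t, v) for t, v in [(0, 101.0), (60, 102.0), (120, 103.0)]]
reference = [Reading(t, 100.0) for t in (0, 60, 120)]
print(drift_exceeds(primary, reference, limit=1.5))  # True (mean offset is 2.0)
```

In practice, the window sizes and limits would come from instrument specifications and process knowledge; a flatline check on a genuinely steady process, for example, needs a window long enough to avoid false alarms.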

Without high-quality, traceable data, early signals are easy to miss. AI-powered analytics won't save you if the underlying data is flawed; worse, they can create false confidence or misleading insights.

Why This Matters for the Business

Bad data isn't just an IT or engineering problem; it's a business risk. In industries where uptime, safety, and regulatory compliance are non-negotiable, the financial exposure of poor data quality is massive.

Gartner estimates that data quality issues cost companies $12.9M per year on average.

For industrial companies, the figure is often much higher: a single incident like this one can surpass it within weeks.

What Leaders Should Be Asking

- Do we trust the data driving operational decisions?

- Can we trace where our data came from, and who changed it?

- Are we catching small issues before they become million-dollar outages?

- Are our AI models, and even our AF Analyses, built on reliable, clean, traceable data, or are they amplifying problems?

Final Thought

In industrial operations, bad data can cost millions. It's not just a matter of dashboards and KPIs; it's turbines, pipelines, and people's safety on the line.

If you’re serious about operational excellence and predictive insights, start with data quality, traceability, and lineage. It’s not optional. It’s mission critical.

Ready to See It in Action?

If your team depends on PI System data to keep your plant running safely and efficiently, Osprey is built for you.